MOTION PICTURE PARSING AND SUMMARIZATION

We are interested in parsing video for postproduction and archiving applications. On the one hand, we seek frame-accurate descriptions of shot boundaries, frame-approximate descriptions of camera moves, shot-by-shot descriptions of framings and groupings, and a framework for semantic analysis of content. On the other, we want to devise rich static visualizations of shots, which we call reverse storyboards, that tell the story of each shot economically and unambiguously.

SAM

At the lowest level we use a real-time mosaicing method called Simplex Adapted Mesh (SAM) [*] that robustly estimates frame-to-frame projective transforms. This is applied within a causal dual-mosaic framework [*] that allows the full shot mosaic to be built without revisiting inaccurate interframe estimates. SAM was first realized in a system for real-time interactive image mosaicing.
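The page does not describe SAM's internals or the dual-mosaic scheme. As a minimal sketch of the generic step any homography-based mosaicer needs, assuming each frame-to-frame estimate is a 3x3 projective matrix `H_k` that maps frame k+1 pixels into frame k, the transform placing frame n in a mosaic anchored at frame 0 is the product `H_0 H_1 ... H_{n-1}`. The function names below are hypothetical, and this plain chaining is exactly what lets small per-frame errors accumulate.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def chain_to_mosaic(frame_to_frame: List[Matrix]) -> List[Matrix]:
    """Given H_k mapping frame k+1 into frame k, return M_n mapping frame n into frame 0."""
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    to_mosaic = [identity]
    for h in frame_to_frame:
        # M_{k+1} = M_k H_k: map into frame k first, then on into the mosaic
        to_mosaic.append(matmul3(to_mosaic[-1], h))
    return to_mosaic


def apply_homography(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through h, including the projective divide."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (u / w, v / w)


# Three hypothetical 5-pixel horizontal shifts between consecutive frames
shift = [[1.0, 0.0, 5.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
mosaic = chain_to_mosaic([shift, shift, shift])
print(apply_homography(mosaic[3], 0.0, 0.0))  # (15.0, 0.0)
```

Any error in one `H_k` is carried into every later `M_n`, which is why a framework that avoids revisiting inaccurate interframe estimates matters.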

- Here's a real-time mosaic constructed from a webcam video of an office. The camera pans and tilts freely, and its centre of rotation is about 10 cm from the optical centre. Note the error visible by the end of the sequence. Although the algorithm self-corrects when areas are revisited, it models interframe motion as a projective transform, which is exact only when the camera rotates purely about its optical centre (or views a planar scene).

- A real-time mosaic of a garden. This video suffers less accumulated error because the scene is far away relative to the distance between the optical centre and the centre of rotation.

- When used interactively, SAM displays monochrome frames when it can't find a good frame-to-frame projective transform, as illustrated in this real-time mosaic of a tower.

- Arbitrary camera movement over a planar scene (in this case, a poster of Michael Faraday) yields an exact projective transform between frames, so SAM can construct an accurate mosaic even though the video is jerky.
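The difference between the office and garden mosaics comes down to parallax. As a rough, order-of-magnitude sketch (not SAM's own error model), assume a pinhole camera with focal length `f` pixels whose optical centre sits `d` metres from the pivot. Rotating by θ moves the optical centre by about `t = 2 d sin(θ/2)`, and a single homography then leaves a residual of roughly `f t |1/Z_near - 1/Z_far|` pixels across a scene whose depth spans `Z_near` to `Z_far`. Only the 10 cm offset comes from the office example; the focal length, pan angle and scene depths are hypothetical.

```python
import math


def baseline_from_offset(offset_m: float, rotation_deg: float) -> float:
    """Optical-centre translation (metres) when the camera rotates by rotation_deg
    about a pivot offset_m away: the chord length 2 d sin(theta / 2)."""
    return 2.0 * offset_m * math.sin(math.radians(rotation_deg) / 2.0)


def residual_parallax_px(focal_px: float, baseline_m: float,
                         z_near_m: float, z_far_m: float) -> float:
    """Crude worst-case misregistration (pixels) left by one homography when
    scene depth spans [z_near_m, z_far_m]; zero when all depths are equal."""
    return focal_px * baseline_m * abs(1.0 / z_near_m - 1.0 / z_far_m)


t = baseline_from_offset(0.10, 20.0)                 # 10 cm offset, assumed 20 degree pan
office = residual_parallax_px(500.0, t, 1.0, 4.0)    # hypothetical indoor depths
garden = residual_parallax_px(500.0, t, 10.0, 50.0)  # hypothetical outdoor depths
print(f"office ~{office:.1f} px, garden ~{garden:.1f} px")  # office ~13.0 px, garden ~1.4 px
```

Under these assumptions the distant garden leaves roughly a tenth of the office's misregistration per pan. The formula only covers general 3D scenes; any planar scene, such as the Faraday poster, is related exactly by a homography.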

ASAP

In research on automated movie analysis [*], we have developed an Automated Shot Analysis Program (ASAP) that parses movies in a way that is useful to people in the production industry. Because its underlying technology is SAM, ASAP has no problem handling black-and-white footage, cartoons, or any other kind of source material.
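The page does not say how ASAP turns motion estimates into log entries. Purely as a hypothetical heuristic, a frame-to-frame homography can be labelled with coarse camera-move terms by checking where the new frame's centre lands in the previous frame (pan/tilt) and how much its linear part scales (zoom). The function name, the tolerances, and the conventions (the matrix maps frame k+1 into frame k; image y points down) are all assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def describe_camera_move(h: Matrix, width: int, height: int,
                         shift_tol: float = 1.0, zoom_tol: float = 0.01) -> List[str]:
    """Label one frame-to-frame homography with coarse camera-move terms.

    Assumes h maps frame k+1 pixels into frame k and image y points down.
    """
    # Normalise so that the bottom-right entry is 1
    n = [[e / h[2][2] for e in row] for row in h]
    cx, cy = width / 2.0, height / 2.0
    # Where does the centre of the new frame land in the previous frame?
    w = n[2][0] * cx + n[2][1] * cy + n[2][2]
    dx = (n[0][0] * cx + n[0][1] * cy + n[0][2]) / w - cx
    dy = (n[1][0] * cx + n[1][1] * cy + n[1][2]) / w - cy
    # Local scale from the linear 2x2 part (ignores the perspective terms)
    scale = math.sqrt(abs(n[0][0] * n[1][1] - n[0][1] * n[1][0]))

    moves = []
    if dx > shift_tol:
        moves.append("pan right")
    elif dx < -shift_tol:
        moves.append("pan left")
    if dy > shift_tol:
        moves.append("tilt down")
    elif dy < -shift_tol:
        moves.append("tilt up")
    # Zooming in, the new frame covers a smaller region of the old one
    if scale < 1.0 - zoom_tol:
        moves.append("zoom in")
    elif scale > 1.0 + zoom_tol:
        moves.append("zoom out")
    return moves or ["static"]


pan = [[1.0, 0.0, 8.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(describe_camera_move(pan, 640, 480))  # ['pan right']
```

Aggregating these per-frame labels over runs of frames would give the frame-approximate camera-move descriptions mentioned above; per-frame labels alone are noisy.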

- Here's an automatically generated log for footage from a music video called Stargazer.

- An automatically generated match dissolve for Stargazer, created by giving our program the first and third shots in this sequence and asking it to find the best shot to place between them to bridge the movement.

- Here's an automatically generated log for the first 20 minutes of the Steve McQueen film Le Mans, which we use to assess logging accuracy because it has both very short shots (e.g. Shots 250-255) and fast foreground motion (e.g. in Shot 85).
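The page does not state how logging accuracy is measured. One common choice, shown here as an assumption rather than our protocol, is precision and recall of detected cut positions against hand-marked cuts, with a frame tolerance (0 for frame-accurate). The function name and the frame numbers in the usage lines are hypothetical, and the greedy nearest-match pairing is a simplification of optimal one-to-one matching.

```python
from typing import List, Tuple


def boundary_precision_recall(detected: List[int], truth: List[int],
                              tolerance: int = 0) -> Tuple[float, float]:
    """Pair each true cut (frame index) with at most one unused detected cut
    within `tolerance` frames, greedily; return (precision, recall)."""
    unused = sorted(detected)
    matched = 0
    for t in sorted(truth):
        # Closest remaining detection within tolerance, if any
        best = None
        for d in unused:
            if abs(d - t) <= tolerance and (best is None or abs(d - t) < abs(best - t)):
                best = d
        if best is not None:
            unused.remove(best)
            matched += 1
    precision = matched / len(detected) if detected else 1.0
    recall = matched / len(truth) if truth else 1.0
    return precision, recall


truth = [120, 300, 302, 450]           # hypothetical hand-marked cuts
detected = [120, 301, 302, 452, 600]   # hypothetical detector output
print(boundary_precision_recall(detected, truth, tolerance=0))  # (0.4, 0.5)
print(boundary_precision_recall(detected, truth, tolerance=2))  # (0.8, 1.0)
```

Very short shots stress the matching: with cuts only two frames apart, a detection one frame off can be claimed by either neighbour, so a zero tolerance is the honest test of frame accuracy.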

SALSA, described on a separate page, is a semi-automated extension to ASAP suitable for use in postproduction and archiving.

Reverse Storyboarding

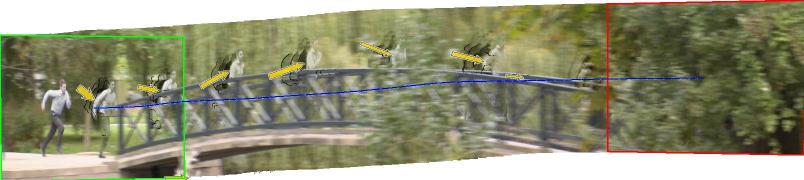

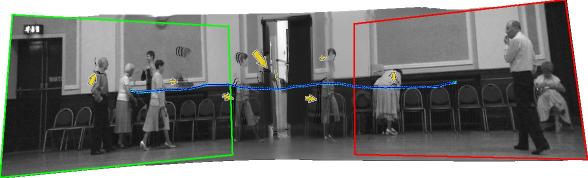

We are continuing to research reverse storyboarding methods [*] [*] for video summarization. This work, done in collaboration with Bob Dony of the University of Guelph, has yielded visualizations that combine storyboard metaphors such as onion skins and streak lines with framings and arrows. The top example here shows a relatively simple shot with object and camera motion in the same direction. The bottom example is much more complicated, with several people moving in different ways within a panning shot.
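The page does not describe how the storyboard elements are computed. As a minimal, hypothetical sketch, assuming an object has already been tracked in each frame and that frame-to-mosaic homographies are available from a mosaicing stage such as SAM, a streak line is the track mapped into mosaic coordinates, and onion skins are earlier poses drawn with decreasing opacity. All names and the linear opacity ramp are assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def to_mosaic(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map a frame pixel into mosaic coordinates, including the projective divide."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def streak_line(track: List[Tuple[int, float, float]],
                frame_to_mosaic: List[Matrix]) -> List[Tuple[float, float]]:
    """track holds (frame_index, x, y) in frame pixels; returns a mosaic polyline."""
    return [to_mosaic(frame_to_mosaic[f], x, y) for f, x, y in track]


def onion_skin_alphas(num_skins: int, min_alpha: float = 0.2) -> List[float]:
    """Opacity per sampled pose, oldest first, rising linearly to 1.0 for the latest."""
    if num_skins <= 0:
        return []
    if num_skins == 1:
        return [1.0]
    step = (1.0 - min_alpha) / (num_skins - 1)
    return [min_alpha + i * step for i in range(num_skins)]


# Camera pans 10 px per frame while the object stays at the same image position,
# so in the mosaic the object moves in the same direction as the camera.
pans = [[[1.0, 0.0, 10.0 * k], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]] for k in range(3)]
track = [(0, 100.0, 50.0), (1, 100.0, 50.0), (2, 100.0, 50.0)]
print(streak_line(track, pans))  # [(100.0, 50.0), (110.0, 50.0), (120.0, 50.0)]
print(onion_skin_alphas(3))
```

Working in mosaic coordinates is what lets object motion and camera motion be shown on one static image; in frame coordinates this object would not appear to move at all.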